Sequential Decision Making under Uncertainty

Reading group (2007)

Past meetings:

| Date |

Topic |

Presenter |

Material |

| Monday November 26, at 16:00 |

Decentralized POMDPs |

Matthijs |

TBD |

| Monday October 29, at 16:00 |

Reinforcement Learning on a Stochastic Timed Discrete-Event system |

Gonšalo Neto |

paper |

| Monday October 15, at 16:00 |

Policy gradient methods |

Francisco |

TBD |

| Monday October 1, at 17:00 |

Active perception using POMDPs |

Matthijs |

slides |

| Monday June 18, at 17:00 |

Non-Markovian reinforcement functions |

Valdinei Silva |

Thiebaux et al., JAIR 2006 |

| Monday June 4, at 17:00 |

Bayesian Reinforcement Learning |

Luis Montesano |

Poupart et al., ICML 2006 |

| Monday May 7, at 17:00 |

Reinforcement learning in general state spaces |

Francisco |

slides |

| Monday April 16, at 17:00 |

Constrained MDPs |

Nelson Gonçalves |

slides |

| Monday March 26, at 17:00 |

Multiagent models for partially observable

environments |

Matthijs |

slides |

| Monday March 12, at 17:00 |

Multiagent learning |

Gonçalo Neto |

slides, survey by Gonçalo |

| Monday February 26, at 17:00 |

Predictive State Representations |

Rodrigo Ventura |

slides, paper, web page |

| Monday February 12, at 17:00 |

Partially observable Markov decision processes |

Matthijs |

slides |

| Monday February 5, at 17:00 |

Reinforcement learning |

Francisco |

slides, Q-learning convergence proof |

| Monday January 22, at 17:00 |

Markov Decision Processes |

Matthijs |

slides |

| Thursday January 11, at 10:00 |

Markov Chains |

Francisco |

slides |

| Thursday January 4, at 10:00 |

Introduction |

Matthijs / Francisco |

slides |

Schedule: bi-weekly on Mondays at 16:00.

Location: ISR meeting

room, 7th floor, Torre Norte.

Organisers: Francisco

Melo and Matthijs

Spaan

Announcements are sent to the mailing

list

Goal

What is this all about?

List of potential topics

The purpose of this reading group is to gather on a

regular basis a group of people with interests in

sequential decision making under uncertainty. During our

meetings, we introduce standard references on the

different topics and discuss state-of-the-art research. We

encourage people to suggest additional topics to those

listed below, as well as to come forward and present their

own research on the different areas.

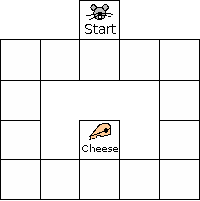

And what do we mean by sequential decision making under

uncertainty? Consider the following scenario.

An intelligent mouse must move about in the maze so as to

get the cheese as quickly as possible. At each square, it

must choose whether to move up, down, left or right.

There is some uncertainty in the outcome of the actions of

the mouse. For example, it may happen that, sometimes,

the action "Move left" the mouse two squares to the left

instead of just one. On the other hand, the mouse must try

to realize in which square of the maze it is by observing

its surroundings. Of course, there are many squares that

look alike, and so the mouse isn't always able to tell

exactly where it is...

This is a typical single agent sequential decision

problem. The agent (mouse) must sequentially choose

an action among its possible actions (left, right, up,

down) so as to optimize some criterion (in this case,

reach the cheese as quickly as possible). The actions

usually have uncertain outcome and there is some

perceptual aliasing involved (the mouse cannot always

accurately perceive its position on the maze).

A common model to address a situation as the one above

is called partially observable Markov decision

process. In our meetings, we discuss this and other

models used to address this kind of problems, as well as

classical and state-of-the-art solution techniques

(i.e., methods used to determine the "best" choice of

actions). We will also address problems with multiple

agents (as an example, consider our mouse scenario once

again, and suppose that there are several mice,

each trying to be the first one to reach the cheese).

A more detailed list of possible topics addressed in our

meetings can be found below.

Within the topic of sequential decision making several

subtopics can be addressed:

- Markov chains: main properties; usefulness as

a basic building block of more complex models (MDPs,

Markov games, POMDPs, etc.);

- Stochastic approximation algorithms: basic

algorithms, convergence, applications in RL;

- Markov decision processes: notation,

properties and standard solution techniques (VI, PI,

etc);

- Reinforcement learning: standard

algorithms such as TD(lambda), SARSA, Q-learning,

actor-critic, etc.

- Advanced RL algorithms: DYNA,

E3, prioritized sweeping, parti-game,

RTDP, hierarchical RL, Bayesian Q-learning, etc.;

- Generalization in RL:

TD(lambda) with linear

function approximation, interpolation-based

Q-learning, Q-learning with soft-state

aggregation, Baird's residual algorithms, kernel-based

RL, LSTD, etc.;

-

Implementation issues: Approximation

architectures (tile-coding, kernel-based, convex

interpolation), optimistic initialization,

exploration/exploitation issues, eligibility traces;

- Partially observable

Markov decision processes: main properties and

standard solution methods (Sondik's two-pass, Chen's

linear support, witness, incremental pruning, etc.);

- Approximate solutions to

POMDPs: Heuristic-based (Q-MDP, entropy-based,

etc.), Matthijs' sampling-based VI, Baxter's

gradient descent methods, reinforcement learning

methods.

- Predictive state representations: main idea,

applications and algorithms;

- Markov games: notation, properties and game

theoretic approaches;

- Multi-agent reinforcement

learning:

Minimax-Q, FF-Q, Nash-Q, multi-agent DYNA-Q,

correlated-Q, etc.

- Coordination:

Fictitious play, coordination graphs, social conventions,

adaptive play, etc.

- Partial observability in multi-agent problems: POSGs,

Dec-POMDPs, I-POMDPs;

- Solution methods for POSGs: Hansen's DP,

Montemerlo's BaGA, etc.

|