Project funded by the Portuguese Science Foundation (FCT), PTDC/EEA-CRO/105413/2008, Jan. 2010 - Dec. 2012


Early experiments in psychological research, such as the famous experiments with prisms and inverting glasses [Kohler62, Snyder52, Stratton96], revealed that much of the geometry of human vision is prior knowledge, stored in the brain as accumulated visual experience. In particular, those experiments showed that a person wearing distorting glasses for a few days could, after a very confusing and disturbing period, learn the image correction needed to interact effectively with the environment again.

This remarkable learning capability of the human visual system contrasts clearly with current calibration methodologies for artificial vision systems, which are still strongly grounded in a priori knowledge of the projection model. The imaging device is usually assumed to have a known topology and, in many cases, a uniform resolution. This knowledge makes it possible to detect and localize edges, extrema, corners and other features in uncalibrated images just as well as in calibrated ones. In short, locally and for many practical purposes, an uncalibrated image looks just like a calibrated image.

This project departs from traditional computer vision by exploring tools for calibrating general central imaging sensors using only data captured from the natural world and its variations. In particular, we consider discrete cameras: collections of pixels (photocells) organized as pencils of lines with unknown topology. Unlike common cameras, a discrete camera may consist of just a sparse, non-regular set of pixels.
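A discrete camera in this sense can be represented very simply. The sketch below assumes a central camera, so each photocell is just a unit direction vector from a common centre, and scatters a hypothetical sparse set of rays at random inside a cone. The function names and the 60° field of view are illustrative choices, not part of the project. In this representation, calibrating the camera means recovering the ray directions without knowing how the pixels are arranged; if only inter-pixel angles are available, the directions can be recovered at best up to a global rotation or reflection.

```python
import math
import random

def random_unit_vector(rng):
    """Direction drawn uniformly on the unit 2-sphere (normalised Gaussian sample)."""
    while True:
        v = [rng.gauss(0.0, 1.0) for _ in range(3)]
        norm = math.sqrt(sum(c * c for c in v))
        if norm > 1e-12:
            return [c / norm for c in v]

def make_discrete_camera(n_pixels, half_fov_deg, seed=0):
    """Hypothetical sparse, irregular central camera: n_pixels rays through a
    single centre, scattered at random inside a cone of half-angle half_fov_deg
    around +z. No grid or neighbourhood structure is stored."""
    rng = random.Random(seed)
    cos_limit = math.cos(math.radians(half_fov_deg))
    rays = []
    while len(rays) < n_pixels:
        v = random_unit_vector(rng)
        if v[2] >= cos_limit:  # keep only rays inside the field of view
            rays.append(v)
    return rays

def inter_pixel_angle(u, v):
    """Angle in radians between two unit rays; clamping guards against rounding."""
    dot = sum(a * b for a, b in zip(u, v))
    return math.acos(max(-1.0, min(1.0, dot)))

camera = make_discrete_camera(n_pixels=50, half_fov_deg=60)
angles = [[inter_pixel_angle(u, v) for v in camera] for u in camera]
print(f"{len(camera)} photocells, widest separation "
      f"{math.degrees(max(map(max, angles))):.1f} deg")
```

The list of rays is all the "geometry" such a camera has; the matrix of inter-pixel angles is the quantity the calibration methodology below tries to estimate from data.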

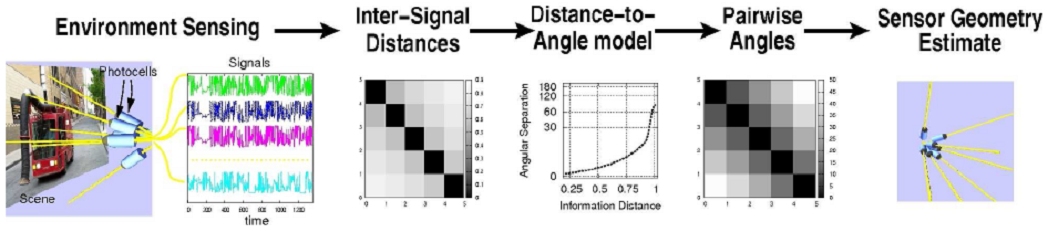

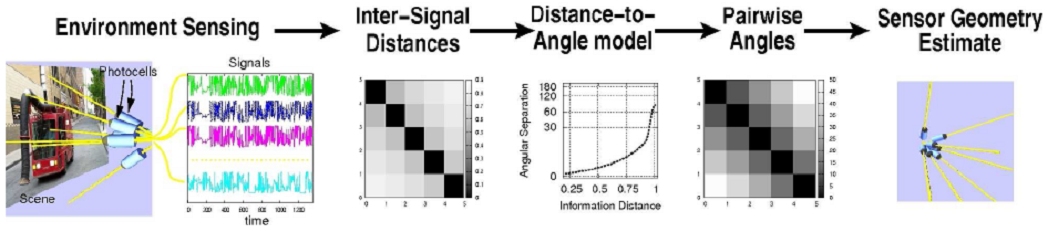

The main idea is that photocells viewing similar directions of the world produce more strongly correlated signals. Unlike many conventional calibration methods in use today, calibrating a discrete camera requires moving it within a diverse, textured natural world. This project follows approaches based on information theory and machine learning.
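The main idea above can be illustrated with a toy simulation. This is a hedged sketch under simplifying assumptions that are ours, not the project's: a 1-D world (pixels on a circle), a hypothetical texture built from random-phase harmonics with 1/k amplitudes, and a camera heading drawn uniformly at random in every frame as a stand-in for isotropic motion. The names `make_scene`, `record_pixel_streams` and `correlation` are illustrative.

```python
import math
import random

def make_scene(n_harmonics=20, seed=1):
    """Hypothetical 1-D textured world: brightness seen along viewing angle phi,
    a sum of random-phase harmonics with amplitudes 1/k."""
    rng = random.Random(seed)
    phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n_harmonics)]
    def brightness(phi):
        return sum(math.cos(k * phi + p) / k for k, p in enumerate(phases, start=1))
    return brightness

def record_pixel_streams(pixel_angles, scene, n_frames=2000, seed=2):
    """Give the camera a uniformly random heading in every frame (an idealised,
    isotropic motion) and record what each photocell sees: one stream per pixel."""
    rng = random.Random(seed)
    headings = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n_frames)]
    return [[scene(a + h) for h in headings] for a in pixel_angles]

def correlation(x, y):
    """Sample Pearson correlation of two equally long streams."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

separations = [0.05, 0.3, 0.8]  # angles (rad) between a reference pixel and three others
streams = record_pixel_streams([0.0] + separations, make_scene())
corrs = [correlation(streams[0], s) for s in streams[1:]]
for delta, r in zip(separations, corrs):
    print(f"separation {delta:.2f} rad -> correlation {r:.2f}")
```

In this toy setting the correlation falls steadily as the angular separation grows. The exact shape of that curve depends on the assumed texture spectrum and motion model, which is why it makes sense to learn the angle-to-distance relation from data rather than fix it in advance.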

Most single-camera calibration methodologies rely on geometric features (e.g. points, lines) registered between known patterns and the image plane [Tsai87, Bouguet08]. However, the need for known calibration patterns and specialized feature detection and matching algorithms makes calibration impractical in many situations. In this project we take an alternative approach and use a different kind of data, namely the brightness (or colour) values captured over time by each photocell, the so-called pixel-streams. Our initial research in this new area [selfRef07, selfRef08] illustrates the advantages of this approach.
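Working with pixel-streams requires a distance between them. Since estimating information distances between pixel-streams is listed in this project as an open issue, the following is only one hedged illustration: it uses the variation of information, H(X|Y) + H(Y|X), a standard information-theoretic metric, estimated with plug-in histograms after quantizing brightness into `n_bins` equal-width levels. The estimator, the bin count, the synthetic streams and the function names are assumptions, not necessarily what is used in [selfRef07, selfRef08].

```python
import math
from collections import Counter

def quantize(stream, n_bins=8):
    """Map a brightness stream to integer levels 0..n_bins-1 using equal-width bins."""
    lo, hi = min(stream), max(stream)
    width = (hi - lo) / n_bins or 1.0  # constant stream: every sample falls in bin 0
    return [min(int((v - lo) / width), n_bins - 1) for v in stream]

def entropy(samples):
    """Plug-in Shannon entropy, in bits, of a sequence of discrete symbols."""
    n = len(samples)
    return -sum(c / n * math.log2(c / n) for c in Counter(samples).values())

def information_distance(x, y, n_bins=8):
    """Variation of information H(X|Y) + H(Y|X) = 2 H(X,Y) - H(X) - H(Y)
    between two pixel-streams, estimated from quantized histograms."""
    qx, qy = quantize(x, n_bins), quantize(y, n_bins)
    return 2.0 * entropy(list(zip(qx, qy))) - entropy(qx) - entropy(qy)

# Synthetic pixel-streams: one brightness value per frame for each photocell.
T = 1000
a = [math.sin(0.1 * t) for t in range(T)]
b = [math.sin(0.1 * t + 0.05) for t in range(T)]  # photocell viewing almost the same direction
c = [math.sin(0.37 * t + 1.0) for t in range(T)]  # photocell viewing elsewhere
print(f"d(a, b) = {information_distance(a, b):.2f} bits")
print(f"d(a, c) = {information_distance(a, c):.2f} bits")
```

Streams from photocells that see nearly the same direction give a small distance, while unrelated streams give a large one. Plug-in entropy estimates are biased for short streams or many bins, which is one reason this estimation is delicate in practice.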

We showed that pixel-streams contain enough information to calibrate discrete cameras, provided that the motion is isotropic and the scene has enough texture. The advantages are clear: the approach works for a large variety of cameras and requires no specific calibration patterns or procedures. There are, however, key challenges that need to be addressed in order to advance research in this area. In this project we address the question of how far one can relax the isotropy and centrality conditions while keeping the calibration accuracy within predefined bounds. These questions are central to calibrating a network of cameras.

The implications of this project include a better theoretical understanding of the statistics of natural images. In the long term, understanding the statistical properties of the visual world is expected to lead to continuous self-calibration mechanisms such as those found in humans, who throughout their lives continuously adapt to different eyeglass prescriptions. In the short term, besides the scientific advances, we will demonstrate a practical application in which a mobile camera automatically classifies its lens based only on its pixel-streams.

The proposed calibration methodology for a discrete camera comprises three main steps: (i) learning a functional relation (a table) between inter-pixel angles and the information distance of the corresponding pixel-streams; (ii) inverting this table, i.e. obtaining a table that gives an inter-pixel angle for each information distance; and (iii) finding the distribution of the pixels on the 2-sphere using a 3D embedding algorithm.
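The three steps above can be sketched end to end on synthetic data. This is a toy, pure-Python sketch under stated assumptions, not the project's implementation: step 1's learned table is replaced by an assumed monotone model, `model_distance` (hypothetical); step 2 inverts the tabulated relation by piecewise-linear interpolation; and step 3 uses one simple embedding, a Gram-matrix factorization in the spirit of classical multidimensional scaling, based on the fact that for unit rays G_ij = cos θ_ij. All helper names are illustrative.

```python
import math
import random

def invert_table(angles, distances):
    """Step 2: turn an angle -> distance table (assumed strictly increasing)
    into a distance -> angle lookup by piecewise-linear interpolation."""
    def angle_of(d):
        if d <= distances[0]:
            return angles[0]
        for i in range(1, len(distances)):
            if d <= distances[i]:
                t = (d - distances[i - 1]) / (distances[i] - distances[i - 1])
                return angles[i - 1] + t * (angles[i] - angles[i - 1])
        return angles[-1]  # beyond the table: clamp to the largest tabulated angle
    return angle_of

def matmul(A, B):
    """Plain list-of-rows matrix product."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def orthonormal_columns(M):
    """Classical Gram-Schmidt on the columns of an n x 3 matrix (list of rows)."""
    basis = []
    for c in zip(*M):
        c = list(c)
        for q in basis:
            p = sum(x * y for x, y in zip(c, q))
            c = [x - p * y for x, y in zip(c, q)]
        norm = math.sqrt(sum(x * x for x in c))
        basis.append([x / norm for x in c])
    return [list(row) for row in zip(*basis)]

def cholesky(B):
    """Lower-triangular L with L L^T = B, for a small symmetric positive-definite B."""
    m = len(B)
    L = [[0.0] * m for _ in range(m)]
    for i in range(m):
        for j in range(i + 1):
            s = B[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L

def embed_on_sphere(theta, n_iter=50, seed=0):
    """Step 3 (one simple choice): G_ij = cos(theta_ij) is the Gram matrix of the
    unknown unit rays. Subspace iteration finds its dominant 3-D eigenspace Q;
    with Q^T G Q = L L^T, the rows of X = Q L satisfy X X^T ~= G. Each row is
    then rescaled onto the unit sphere (result is defined up to rotation/reflection)."""
    G = [[math.cos(t) for t in row] for row in theta]
    rng = random.Random(seed)
    Q = orthonormal_columns([[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in G])
    for _ in range(n_iter):
        Q = orthonormal_columns(matmul(G, Q))
    Qt = [list(col) for col in zip(*Q)]
    X = matmul(Q, cholesky(matmul(Qt, matmul(G, Q))))
    return [[x / math.sqrt(sum(v * v for v in row)) for x in row] for row in X]

def angle(u, v):
    """Angle in radians between two unit vectors."""
    return math.acos(max(-1.0, min(1.0, sum(a * b for a, b in zip(u, v)))))

# --- Synthetic check (hypothetical data, not project results) ---
rng = random.Random(1)
rays = []
while len(rays) < 30:  # ground-truth rays scattered in a 60-degree cone around +z
    v = [rng.gauss(0.0, 1.0) for _ in range(3)]
    norm = math.sqrt(sum(c * c for c in v))
    if norm > 1e-12 and v[2] / norm >= math.cos(math.radians(60)):
        rays.append([c / norm for c in v])

def model_distance(a):
    """Assumed stand-in for step 1: a monotone angle -> distance relation."""
    return 1.0 - math.exp(-a / 0.4)

grid = [math.pi * k / 200 for k in range(201)]
angle_of = invert_table(grid, [model_distance(a) for a in grid])
measured = [[model_distance(angle(u, v)) for v in rays] for u in rays]
theta = [[angle_of(d) for d in row] for row in measured]
recovered = embed_on_sphere(theta)
err = max(abs(angle(recovered[i], recovered[j]) - angle(rays[i], rays[j]))
          for i in range(len(rays)) for j in range(len(rays)))
print(f"largest inter-pixel angle error after recovery: {err:.1e} rad")
```

Because the embedding fixes the rays only up to a global rotation or reflection, the check compares inter-pixel angles rather than raw coordinates. With real pixel-streams the distances are noisy and the table must be learned, so the recovery error can be considerably larger than in this noise-free example.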

The project comprises four principal tasks: calibrating single discrete cameras; comparing the proposed calibration methodology with other methodologies; developing a lens/optics classification application; and calibrating a network of cameras.

A number of contributions are expected from this project, namely: novel techniques for estimating information distances between pixel-streams (still an open issue); a characterization of the effect of relaxing motion isotropy on the precision and accuracy of the calibration; novel methods for embedding the pixels on a 2-sphere and estimating the topology of an unknown discrete camera; and a methodology for estimating the baselines between discrete cameras.

| Name | Affiliation |
|---|---|
| José António Gaspar | Tech. Univ. of Lisbon / IST / ISR |
| Etienne Gregoire Grossmann | TYZX Corporation |
| José Santos Victor | Tech. Univ. of Lisbon / IST / ISR |
| Francesco Orabona | Università degli Studi di Milano |
| Plinio Moreno López | Tech. Univ. of Lisbon / IST / ISR |
| Ruben Martinez Cantin | Centro Universitario de la Defensa |

Prof. José Gaspar, Instituto de Sistemas e Robótica, Instituto Superior Técnico, Torre Norte, Av. Rovisco Pais, 1, 1049-001 Lisboa, PORTUGAL

Office: Torre Norte do IST, 7.19

Phone: +351 21 8418 293

Fax: +351 21 8418 291

Web: http://www.isr.ist.utl.pt/~jag

E-mail:

Institute for Systems and Robotics, Computer and Robot Vision Lab