Introduction

Several aspects of human interaction with large decentralized robotic systems engaged in information gathering are described. The term Active Sensor Network (ASN), as used in this work, refers to a fully decentralized network of sensors, some or all of which have actuators and are, in that sense, active.

Sensor networks based on a decentralized architecture are scalable, robust, and modular. For an ASN, both the data fusion and the control parts must be decentralized and scalable. For an ASN with a human operator in the loop, the human-robot interface must also be designed with scalability in mind.

The primary goal of this study is to investigate the conditions under which human involvement will not jeopardize the scalability of the overall system. The nature of human-robot interaction in any multi-robot system is two-fold: a) how to present the user with a global view of a system which is likely to be decentralized; and b) how to influence the actions of many information gathering robots.

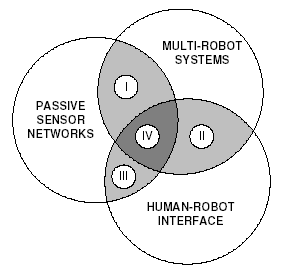

Figure 1. The field of human-robot interface

This work lies at the intersection of three related fields: sensor networks, multi-robot systems, and human-robot interfaces, as shown graphically in Figure 1.

Human Role in Decentralized Data Fusion

Data fusion in general refers to the process of combining observations from multiple, dissimilar sensors into a globally consistent view of the world. Decentralized data fusion (DDF) must, in addition, satisfy other constraints which are beyond the scope of this overview. One of the challenges in designing an ASN is how to present the human operator with a global picture of the world in a decentralized system in such a way that the GUI does not become a communication or processing bottleneck.

In broad terms, a network can be queried for two types of information: information about the environment and information about the components of the network itself. It is important to note that the former does not increase in size as the network grows, whereas the latter does.

Human Role in Decentralized Control

Some or all network components in an ASN may have actuators. The control objective is typically to maximize the total information gain realized by the network, subject to certain state or control constraints. Modelling of platforms, sensors and environment as a set of continuous states, together with the use of information as payoff, allows the information acquisition problem to be formulated as a standard optimal control problem.
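As a rough illustration of information used as payoff, the sketch below scores candidate actions by the entropy reduction each is expected to produce in a 2D Gaussian feature estimate. It is a one-step greedy stand-in, not the optimal control formulation itself. The function names, the 2x2 information matrices, and the candidate actions are all assumptions made for the example.

```python
import math


def det2(m):
    """Determinant of a 2x2 matrix given as nested lists."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def add2(a, b):
    """Element-wise sum of two 2x2 matrices."""
    return [[a[i][j] + b[i][j] for j in range(2)] for i in range(2)]


def information_gain(y_prior, i_obs):
    """Entropy reduction (in nats) of a Gaussian estimate when an
    observation with information matrix i_obs is fused into a prior
    with information matrix y_prior (both are inverse covariances)."""
    y_post = add2(y_prior, i_obs)
    return 0.5 * math.log(det2(y_post) / det2(y_prior))


def best_action(y_prior, candidates):
    """Return the name of the candidate whose expected observation
    yields the largest information gain (greedy, one step ahead)."""
    return max(candidates, key=lambda name: information_gain(y_prior, candidates[name]))


# Hypothetical scenario: prior covariance diag(4, 4) on a feature position.
prior = [[0.25, 0.0], [0.0, 0.25]]
# Expected observation information for two made-up actions:
# staying far away (variance 4) or approaching the feature (variance 1).
actions = {
    "stay": [[0.25, 0.0], [0.0, 0.25]],
    "approach": [[1.0, 0.0], [0.0, 1.0]],
}
chosen = best_action(prior, actions)
```

In this toy setting the closer, more precise observation carries more information, so the greedy rule prefers the approaching action. State and control constraints are omitted here for brevity.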

Several classification systems for human-robot interactions have been suggested. For example, Scholtz identifies mechanic, supervisory, and peer-to-peer levels of human-robot interaction. We suggest grouping the possible options into two broad categories, state-based and information-based, which are examined in more detail below.

State-based control can take the form of direct control, where a sequence of actions is sent through the network, addressed to the components which possess the specified attributes. This approach is a direct extension of teleoperation. Another mode in this category is mode-switch control, where the components operate autonomously most of the time but are periodically commanded to switch from one pre-programmed action policy to another. A third is payoff-change control, where a change in the utility function is sent to the network components.

Under the information-based control mode, information about the environment which has become available from outside the network is entered into the network by a human operator. It is then fused into the system and will affect the actions of one or more of the network components.

State-based control faces a scalability problem: communication traffic increases, and a human operator is limited in the ability to assist several robots simultaneously. A larger number of robots requires more assistance. One solution is to increase the number of operators; another is to increase the level of autonomy exercised by the robots. The goal of this work is to examine architectures which allow growth to arbitrary size, which precludes, at the current stage, the use of techniques related to adjustable autonomy. Fully autonomous operation is therefore assumed and only information-based control is used.

Experiments

Experiments have been conducted using an existing active sensor network consisting of mobile platforms (Pioneer II) and stationary sensors (SICK laser range finders). The network has been extended with a stationary Graphical User Interface (GUI) node and a mobile GUI node implemented on an iPaq PDA.

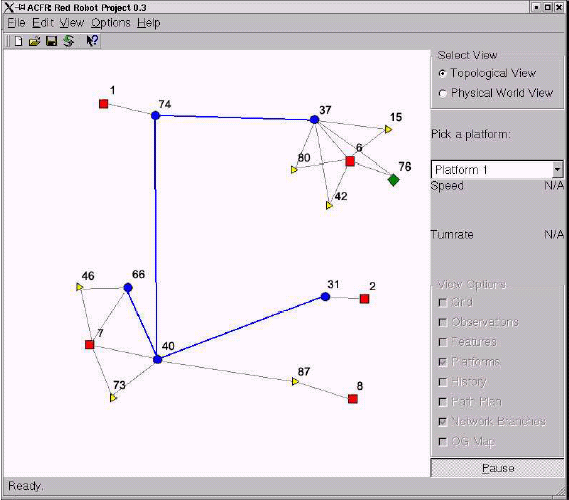

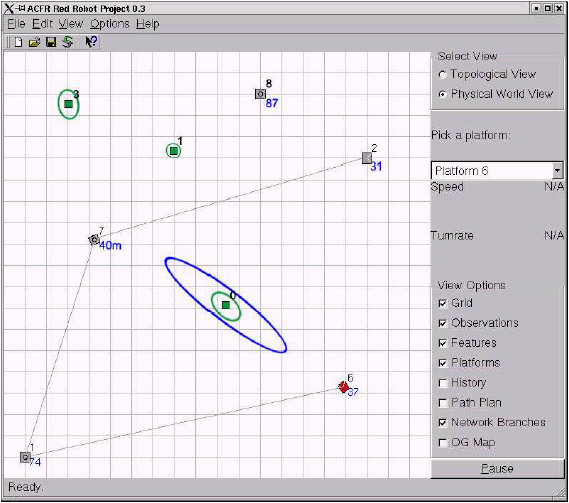

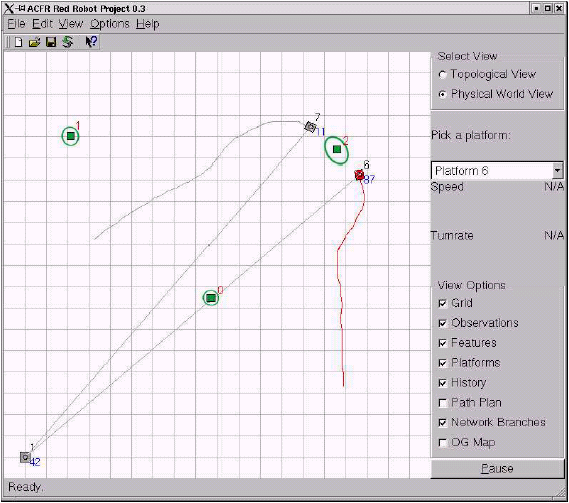

The first objective of the GUI is to provide the human operator with a view into the functioning of the ASN. Figures 2 and 3 display two different views of the state of the network: the network topology is shown in Figure 2 and the physical layout of the network is shown in Figure 3.

Figure 2: Topological view of the Active Sensor Network (ASN)

Figure 3: Physical view of the Active Sensor Network (ASN)

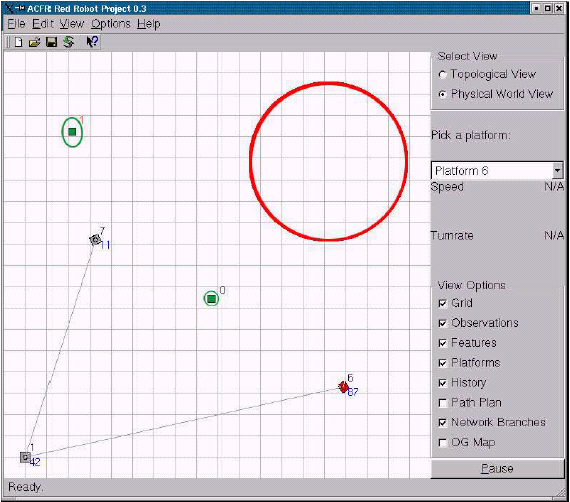

The second purpose of the GUI is to provide an opportunity for a human operator to influence the decision making inside the ASN. The control mission is to track features observed by a robot or a human. The human operator can identify the likely location of a feature currently unknown to the network and draw a circle representing the uncertainty of his or her observation (see Figure 4). The information enters the DDF network and is treated like any other sensor information. The response of the two mobile robots to that human-entered information is shown in Figure 5: they converged onto the feature and the uncertainty decreased.

Figure 4: The operator enters a feature unknown to the sensor network

Figure 5: The active nodes respond to its presence by converging onto it
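The way an operator-drawn circle can be treated like any other sensor reading might be sketched as follows. The sketch assumes an information-form (inverse covariance) Gaussian representation and assumes that the circle radius is read as one standard deviation; neither detail is stated above. All function names and numbers are hypothetical.

```python
def circle_to_information(cx, cy, radius):
    """Convert a circle (centre, radius) into an information vector and
    information matrix. Assumption: the radius is one standard deviation
    of an isotropic 2D Gaussian position observation."""
    w = 1.0 / (radius * radius)
    return [w * cx, w * cy], [[w, 0.0], [0.0, w]]


def fuse(state, obs):
    """Fuse an observation into the state by adding information terms."""
    y, Y = state
    i, I = obs
    fused_y = [y[0] + i[0], y[1] + i[1]]
    fused_Y = [[Y[r][c] + I[r][c] for c in range(2)] for r in range(2)]
    return fused_y, fused_Y


def estimate(state):
    """Recover the mean and covariance by inverting the 2x2 information matrix."""
    y, Y = state
    det = Y[0][0] * Y[1][1] - Y[0][1] * Y[1][0]
    P = [[Y[1][1] / det, -Y[0][1] / det],
         [-Y[1][0] / det, Y[0][0] / det]]
    mean = [P[0][0] * y[0] + P[0][1] * y[1],
            P[1][0] * y[0] + P[1][1] * y[1]]
    return mean, P


# The feature is unknown to the network: zero prior information.
unknown = ([0.0, 0.0], [[0.0, 0.0], [0.0, 0.0]])

# Operator draws a circle of radius 2 m centred at (4, 2) (made-up values).
after_human = fuse(unknown, circle_to_information(4.0, 2.0, 2.0))
human_mean, human_cov = estimate(after_human)

# A robot later observes the feature more precisely; its reading is fused
# through exactly the same path (made-up values, 1 m standard deviation).
after_robot = fuse(after_human, circle_to_information(5.0, 2.0, 1.0))
robot_mean, robot_cov = estimate(after_robot)
```

Because fusion in this form is a simple addition, the human entry and the robot reading go through the same code path, and the covariance shrinks once the robot observation arrives, in line with the behaviour reported for Figure 5.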

Conclusions

- Human involvement is an integral part of the operation of an ASN and making it scalable is crucial to the overall scalability of the system.

- If the data fusion architecture is decentralized, then connecting an operator GUI to it is a straightforward procedure.

- The decentralized control techniques demonstrated in this paper are a natural extension of the DDF architecture. This work shows that, just as in data fusion, techniques based in information space rather than state space are more decentralizable and scalable. In terms of scalability, this means that providing the network with additional information is preferable to issuing commands to the network components.

- While the architecture allows an arbitrary number of human operators to be connected to the network with read and write access, the human factors related to maintaining the integrity and consistency of the network data may present a challenge.

Future Work

- Validate information-based robot control on a larger sensor network.

- Examine the extent to which adjustable autonomy methods can be made scalable.

- Apply current techniques to other domains: outdoor vehicles, flight platforms, underwater vehicles, etc.