Octomap

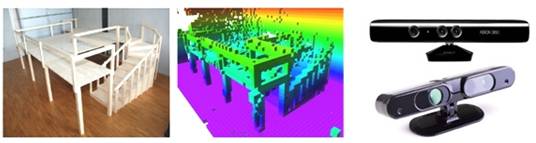

by K. Wurm et al.; MS Kinect

MSc dissertation proposal 2013/2014

Integrating Color-Depth Images into World Representations

Introduction:

Recent vision sensors such as the Microsoft Kinect [MS-Kinect], which provide depth information in addition to video, promise data of sufficient quality to build complex scene representations from scratch. This MSc project proposal is concerned with designing scene representations that integrate video and depth information.

Objectives:

The objectives of this work are twofold: (i) building scene representations that encompass multiple data acquisitions made with the Kinect camera, and (ii) creating methods for fast browsing of those scene representations.

Detailed description:

The recent market introduction of the MS Kinect camera [MS-Kinect], which provides depth (3D) information in addition to visual information about the scene, makes creating scene representations easier. Integrating this 3D information is still an open issue, mainly because of the large amounts of data involved and the large computational resources required. Some recent research works show that, with a suitable choice of scene representation, data acquisition, processing and integration can be done on a standard PC. One such reference is the work by Wurm et al. [Wurm10], where a mobile robot maps a number of campuses. Another is the work by Shen et al. [Shen11], where a flying robot (quadrotor) maps a multi-floor scenario using a Kinect camera (a video is linked in the [Shen11] reference).
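To illustrate what a compact, probabilistic scene representation can look like, the sketch below keeps a log-odds occupancy value per voxel, in the spirit of the probabilistic 3D map of [Wurm10]. It is a simplification under stated assumptions: a flat dictionary of voxels stands in for an octree, and the class name `VoxelMap`, the resolution, the hit/miss probabilities and the clamping bounds are all illustrative choices, not values taken from this proposal.

```python
import math


def logit(p):
    """Convert a probability into log-odds."""
    return math.log(p / (1.0 - p))


class VoxelMap:
    """Sparse occupancy grid with per-voxel log-odds (hypothetical helper class)."""

    def __init__(self, resolution=0.1, p_hit=0.7, p_miss=0.4, clamp=(0.12, 0.97)):
        # All parameter values are assumptions chosen for illustration.
        self.resolution = resolution
        self.l_hit = logit(p_hit)
        self.l_miss = logit(p_miss)
        self.l_min, self.l_max = logit(clamp[0]), logit(clamp[1])
        self.cells = {}  # voxel index (i, j, k) -> log-odds of occupancy

    def key(self, point):
        """Map a 3D point in metres to the integer index of its voxel."""
        return tuple(math.floor(c / self.resolution) for c in point)

    def update(self, point, hit=True):
        """Add one measurement: hit=True for an occupied voxel, False for a free one."""
        k = self.key(point)
        l = self.cells.get(k, 0.0) + (self.l_hit if hit else self.l_miss)
        # Clamping bounds the confidence so the map can still change later.
        self.cells[k] = min(self.l_max, max(self.l_min, l))

    def probability(self, point):
        """Occupancy probability of the voxel containing the point (0.5 if unknown)."""
        l = self.cells.get(self.key(point), 0.0)
        return 1.0 - 1.0 / (1.0 + math.exp(l))
```

Only observed voxels are stored, which is one way to limit the amount of data that has to be kept in memory; an octree would additionally allow merging neighbouring voxels with the same state.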

The works cited above provide conceptual starting points and software libraries that facilitate experiments, and therefore constitute the starting point of the MSc project. Other aspects of the work involve data acquisition and display of the resulting scenarios. An interesting point to explore is the use of the acquired data for navigation experiments.

In summary, the work is organized in the following main steps:

1) data acquisition, which implies moving the robot within the scene and capturing scene information

2) data integration into a single scene representation

3) demonstration of a virtual tour inside the scene representation, or of trajectory following with the robot
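Steps 1 and 2 can be sketched as follows, assuming a pinhole camera model and a known camera pose for each acquisition. The function names, the intrinsic parameters (`fx`, `fy`, `cx`, `cy`), the frame format and the voxel resolution are hypothetical placeholders; a real setup would use calibrated intrinsics and poses estimated by the robot.

```python
import math


def backproject(u, v, depth_m, fx=525.0, fy=525.0, cx=319.5, cy=239.5):
    """Pinhole back-projection of pixel (u, v) with depth in metres.

    The default intrinsics are assumed nominal values, not calibration results.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def to_world(point, rotation, translation):
    """Apply a rigid transform: rotation is a 3x3 nested list, translation a 3-tuple."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


def integrate(scene, frame, rotation, translation, resolution=0.05):
    """Merge one colour-depth acquisition into the shared scene.

    frame: iterable of (u, v, depth_m, (r, g, b)) samples.
    scene: dict mapping a voxel index to the last colour observed there.
    """
    for u, v, depth_m, rgb in frame:
        if depth_m <= 0:  # skip pixels without a valid depth reading
            continue
        p = to_world(backproject(u, v, depth_m), rotation, translation)
        key = tuple(math.floor(c / resolution) for c in p)
        scene[key] = rgb  # simplest policy: the most recent colour wins
    return scene
```

Calling `integrate` once per acquisition with the corresponding pose accumulates all frames into one dictionary, which can then be rendered for a virtual tour. Keeping the last colour per voxel is only the simplest choice; averaging colours would be an alternative.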

References:

[HRI2007] 2nd ACM/IEEE International Conference on Human-Robot Interaction, http://hri2007.org/

[Gaspar00] José Gaspar, Niall Winters, José Santos-Victor, "Vision-based Navigation and Environmental Representations with an Omnidirectional Camera", IEEE Transactions on Robotics and Automation, vol. 16, no. 6, December 2000

[ISR-galery] Some images of unicycle-type robots at ISR: see labmate, scouts, pioneers, ... in "Mini galeria de fotografias de projectos @ IST/ISR", http://users.isr.ist.utl.pt/~jag/infoforum/isr_galeria/

[MS-Kinect] http://en.wikipedia.org/wiki/Kinect

[Wurm10] Kai M. Wurm, Armin Hornung, Maren Bennewitz, Cyrill Stachniss, Wolfram Burgard, "OctoMap: A Probabilistic, Flexible, and Compact 3D Map Representation for Robotic Systems", in Proceedings of the ICRA 2010 Workshop on Best Practice in 3D Perception and Modeling for Mobile Manipulation, 2010, http://www.informatik.uni-freiburg.de/~wurm/papers/wurm10octomap.pdf

[Shen11] Shaojie Shen, Nathan Michael, and Vijay Kumar, "3D Indoor Exploration with a Computationally Constrained MAV", ICRA 2011. See also the video at http://www.youtube.com/watch?v=cOeCZDBHrJs&feature=channel_video_title

Expected results:

By the end of the work, the students will have enriched their knowledge of:

* Computer vision

* Building scene representations

Observations:

--

More MSc dissertation proposals on Computer and Robot Vision at: http://omni.isr.ist.utl.pt/~jag